24 / 06 / 04

June 4th: Some Thoughts

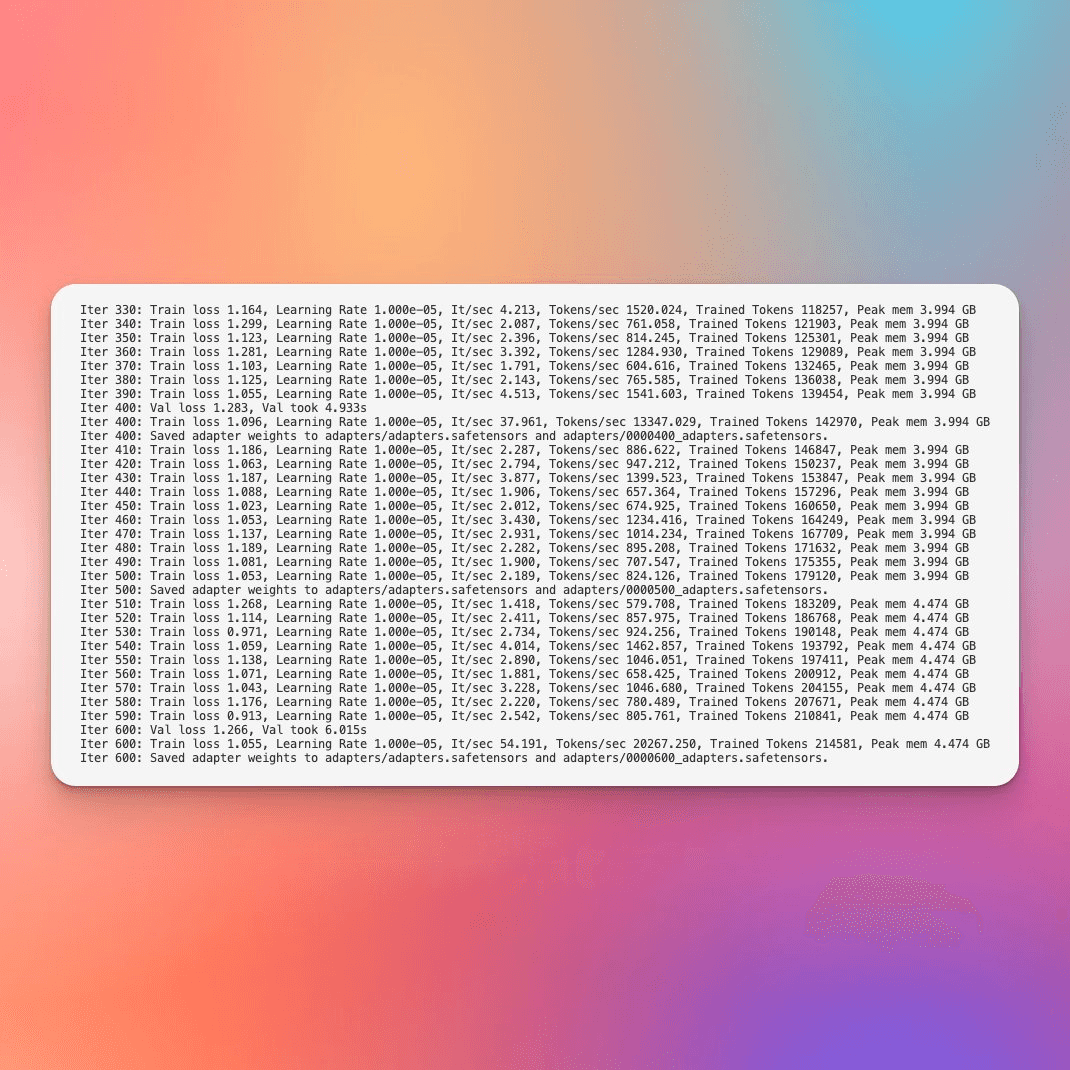

Today I took a careful look at MLX, the training framework released by Apple, and found it very interesting. It can train and deploy models locally on a MacBook, which feels very cool. I tested the Qwen1.5-0.5B model on my M2 Max laptop with 32GB of memory, using the official sample data, and it ran successfully, which I think is pretty good. If you want to train models but are short on GPU resources, MLX is a very good choice. The catch is that training a somewhat larger model will probably mean picking a much higher-spec machine the next time you change computers. I'd guess that LoRA fine-tuning a 7B model on a 32GB MacBook should be no problem? 😂 The screenshot above shows my training run.
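To sanity-check the 7B guess, here is a rough back-of-the-envelope sketch in plain Python. It is not an MLX API, and the function name and defaults are my own assumptions: weights stored at 2 bytes each (fp16/bf16), LoRA adapters at a hypothetical 0.1% of the base parameter count, and 16 bytes per trainable parameter (fp32 weight, gradient, and two Adam moments). It ignores activations, which grow with batch size and sequence length, so treat the result as a lower bound only.

```python
def estimate_lora_memory_gb(
    n_params: float,
    bytes_per_weight: float = 2.0,         # assumption: fp16/bf16 base weights
    lora_fraction: float = 0.001,          # assumption: adapters are ~0.1% of base params
    adapter_bytes_per_param: float = 16.0, # assumption: fp32 weight + grad + 2 Adam moments
) -> float:
    """Rough lower bound on LoRA fine-tuning memory, in GB (1 GB = 1e9 bytes).

    Counts only the frozen base weights plus the trainable adapter state.
    Activation memory is NOT included.
    """
    base_bytes = n_params * bytes_per_weight
    adapter_bytes = n_params * lora_fraction * adapter_bytes_per_param
    return (base_bytes + adapter_bytes) / 1e9


if __name__ == "__main__":
    for name, n in [("0.5B", 0.5e9), ("7B", 7e9)]:
        fp16 = estimate_lora_memory_gb(n)
        q4 = estimate_lora_memory_gb(n, bytes_per_weight=0.5)  # 4-bit quantized base
        print(f"{name}: ~{fp16:.1f} GB at fp16, ~{q4:.1f} GB with a 4-bit base")
```

Under these assumptions a 7B model needs about 14 GB just for fp16 weights, which leaves some room on a 32GB machine for activations and the OS, and a 4-bit base would leave much more. Whether it actually fits depends on batch size and sequence length, so this only says the guess is plausible, not that it is guaranteed.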

Today I also looked at the official code examples for CrewAI and LLM From Scratch. They are quite useful for getting familiar with code again; after all, I haven't written code for a long time 😂
